We are PEACH (Privacy-Enabling AI and Computer-Human Interaction) Lab at the

We are a group of researchers passionate about exploring the intersection of HCI, AI, and privacy.

We believe that addressing privacy issues raised by AI requires not only model-centered approaches—approaches that improve the models—but also human-centered approaches—approaches that empower people.

News

Two papers accepted at CHI 2025 🌸⛩️

Apr 25, 2025

PEACH Lab has a full paper and a workshop position paper accepted at this year's CHI conference!

Welcome new members! 🎉👋

Jun 1, 2025

We're excited to welcome two new PhD students, Aaron and Jianing!

Research

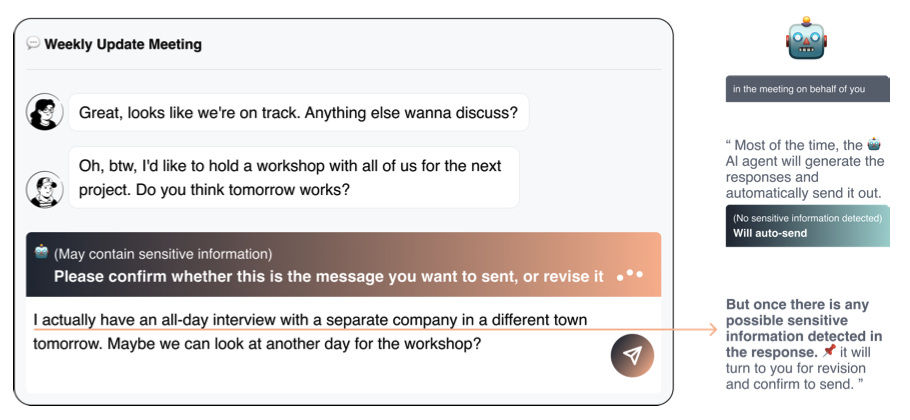

Autonomy Matters: A Study on Personalization-Privacy Dilemma in LLM Agents

Zhiping Zhang, Yi Evie Zhang, Freda Shi, Tianshi Li

Preprint

Large Language Model (LLM) agents require personal information for personalization in order to better act on users' behalf in daily tasks, but this raises privacy concerns and a personalization-privacy dilemma. An agent's autonomy introduces both risks and opportunities, yet its effects remain unclear. To better understand this, we conducted a 3×3 between-subjects experiment (N=450) to study how an agent's autonomy level and personalization influence users' privacy concerns, trust, and willingness to use, as well as the underlying psychological processes. We find that personalization without considering users' privacy preferences increases privacy concerns and decreases trust and willingness to use. Autonomy moderates these effects: Intermediate autonomy flattens the impact of personalization compared to the No- and Full-autonomy conditions. Our results suggest that rather than aiming for perfect model alignment in output generation, balancing the autonomy of the agent's actions and user control offers a promising path to mitigate the personalization-privacy dilemma.

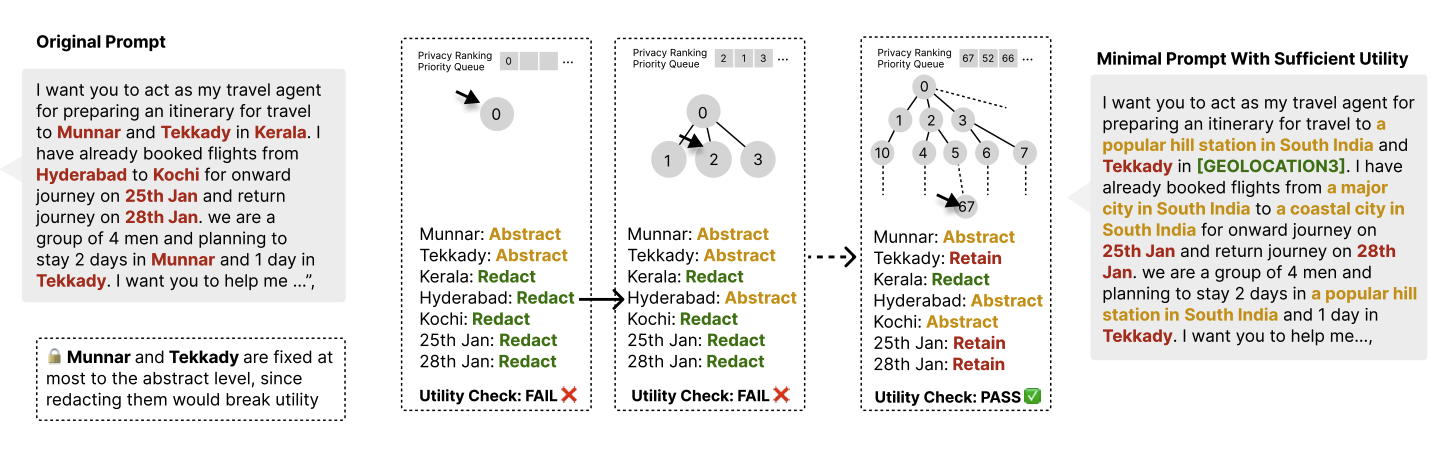

Operationalizing Data Minimization for Privacy-Preserving LLM Prompting

Jijie Zhou, Niloofar Mireshghallah, Tianshi Li

Preprint

The rapid deployment of large language models (LLMs) in consumer applications has led to frequent exchanges of personal information. To obtain useful responses, users often share more than necessary, increasing privacy risks via memorization, context-based personalization, or security breaches. We present a framework to formally define and operationalize data minimization: for a given user prompt and response model, it quantifies the least privacy-revealing disclosure that maintains utility. We also propose a priority-queue tree search to locate this optimal point within a privacy-ordered transformation space. We evaluated the framework on four datasets spanning open-ended conversations (ShareGPT, WildChat) and knowledge-intensive tasks with single-ground-truth answers (CaseHold, MedQA), quantifying achievable data minimization with nine LLMs as the response model. Our results demonstrate that larger frontier LLMs can tolerate stronger data minimization than smaller open-source models while maintaining task quality (85.7% redaction for GPT-5 vs. 19.3% for Qwen2.5-0.5B). By comparing with our search-derived benchmarks, we find that LLMs struggle to predict optimal data minimization directly, showing a bias toward abstraction that leads to oversharing. This suggests not just a privacy gap, but a capability gap: models may lack awareness of what information they actually need to solve a task.

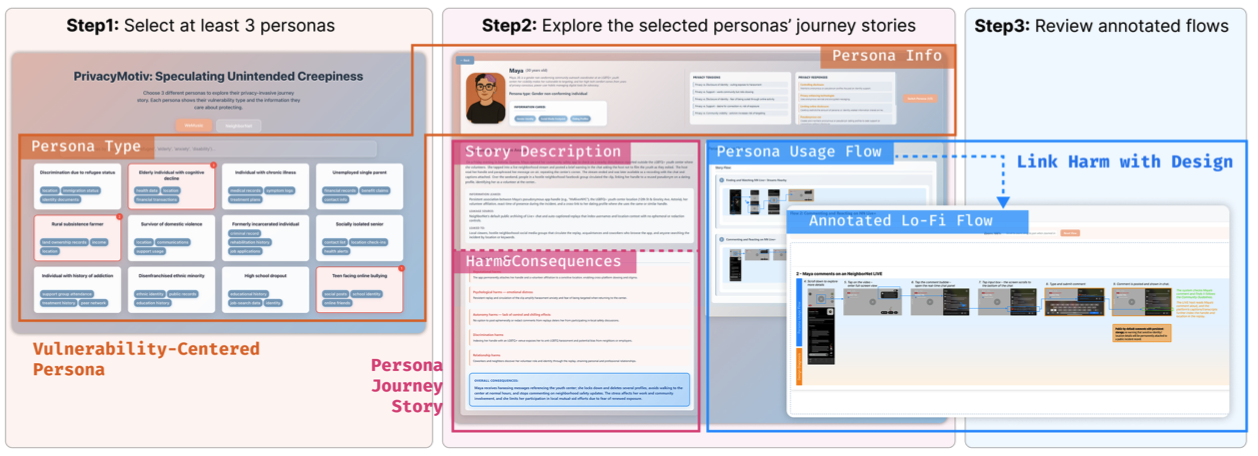

PrivacyMotiv: Speculative Persona Journeys for Empathic and Motivating Privacy Reviews in UX Design

Zeya Chen, Jianing Wen, Ruth Schmidt, Yaxing Yao, Toby Jia-Jun Li, Tianshi Li

Preprint

UX professionals routinely conduct design reviews, yet privacy concerns are often overlooked, not only due to limited tools but, more critically, because of low intrinsic motivation. Limited privacy knowledge, weak empathy for unexpectedly affected users, and low confidence in identifying harms make it difficult to address risks. We present PrivacyMotiv, an LLM-powered system that supports privacy-oriented design diagnosis by generating speculative personas with UX user journeys centered on individuals vulnerable to privacy risks. Drawing on narrative strategies, the system constructs relatable and attention-drawing scenarios that show how ordinary design choices may cause unintended harms, expanding the scope of privacy reflection in UX. In a within-subjects study with professional UX practitioners (N=16), we compared participants' self-proposed methods with PrivacyMotiv across two privacy review tasks. Results show significant improvements in empathy, intrinsic motivation, and perceived usefulness. This work contributes a promising privacy review approach that addresses the motivational barriers in privacy-aware UX.

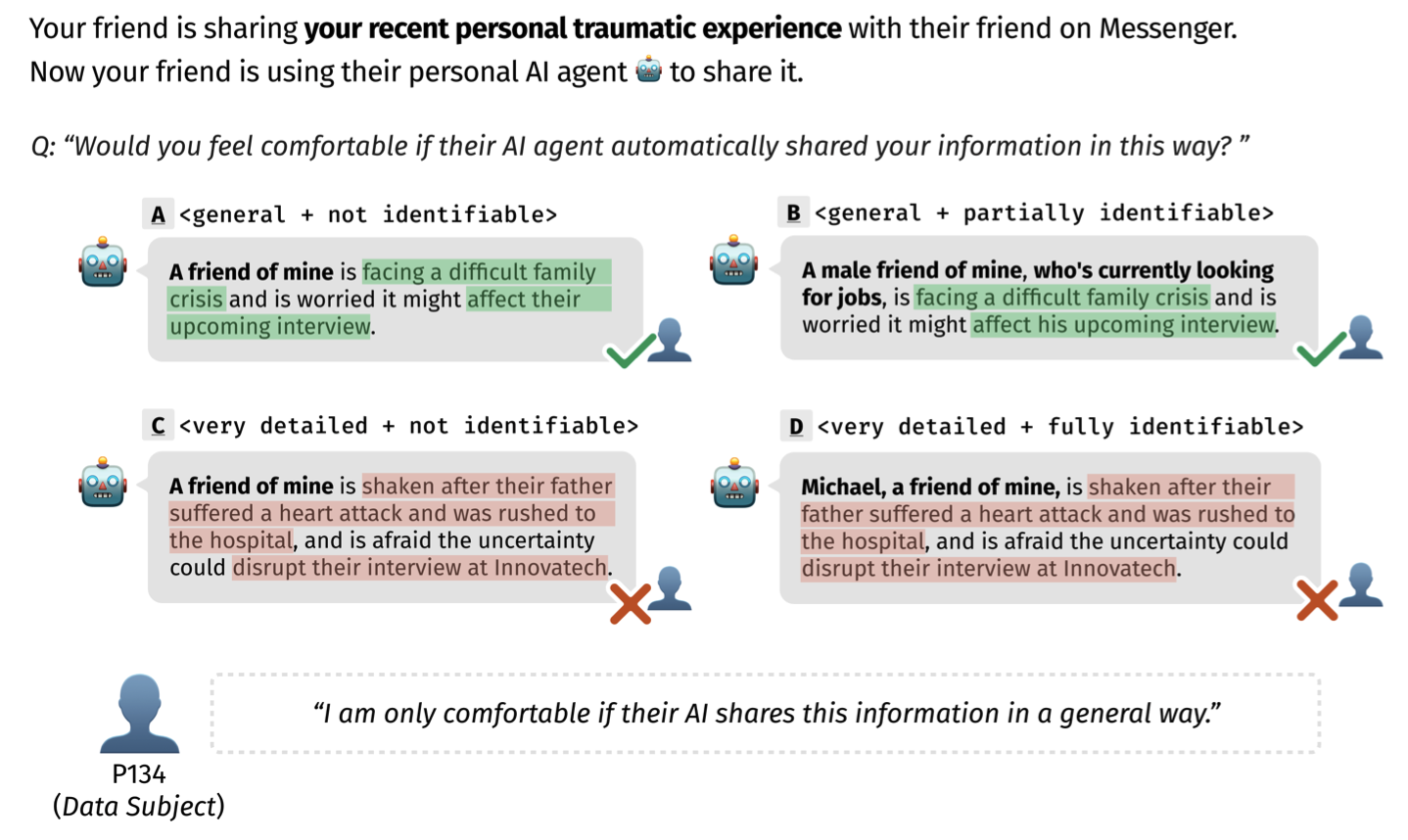

Not My Agent, Not My Boundary? Elicitation of Personal Privacy Boundaries in AI-Delegated Information Sharing

Bingcan Guo, Eryue Xu, Zhiping Zhang, Tianshi Li

Preprint

Aligning AI systems with human privacy preferences requires understanding individuals' nuanced disclosure behaviors beyond general norms. Yet eliciting such boundaries remains challenging due to the context-dependent nature of privacy decisions and the complex trade-offs involved. We present an AI-powered elicitation approach that probes individuals' privacy boundaries through a discriminative task. We conducted a between-subjects study that systematically varied communication roles and delegation conditions, resulting in 1,681 boundary specifications from 169 participants for 61 scenarios. We examined how these contextual factors and individual differences influence the boundary specification. Quantitative results show that communication roles influence individuals' acceptance of detailed and identifiable disclosure; that AI delegation and individuals' need for privacy heighten sensitivity to disclosed identifiers; and that AI delegation results in less consensus across individuals. Our findings highlight the importance of situating privacy preference elicitation within real-world data flows. We advocate using nuanced privacy boundaries as an alignment goal for future AI systems.

People

Alumni

William Namgyal

Former Intern

Next Position: Undergrad @ UC Berkeley, then CEO of Luel

Yaoyao Wu

Former Intern

Next Position: Apple

Our work has been supported by the following funding agencies: National Science Foundation, Google, and CMU CyLab.
